T5

Official

Summary

We explore the landscape of transfer learning techniques for NLP by introducing a unified framework that converts all text-based language problems into a text-to-text format. Modern techniques for transfer learning in NLP often pre-train using unsupervised learning on unlabeled data. We leverage a unified approach to transfer learning that allows us to systematically study different approaches.

The basic idea underlying our work is to treat every text processing problem as a “text-to-text” problem. This framework allows us to directly apply the same model, objective, training procedure, and decoding process to every task, including English-based NLP problems, question answering, document summarization, sentiment classification, etc.

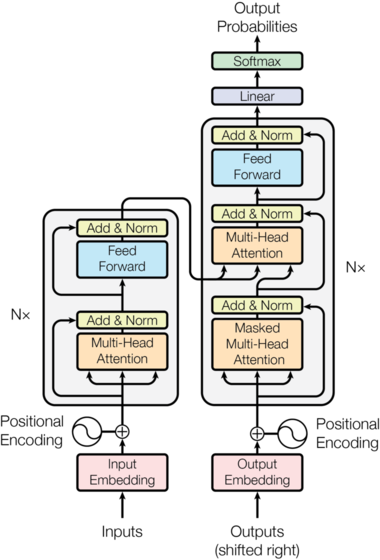

Early results on transfer learning for NLP leveraged recurrent neural networks, but it has recently become more common to use models based on the “Transformer” architecture. Due to its increasing ubiquity, all of the models we study are based on it.

http://nlp.seas.harvard.edu/2018/04/03/attention.html


Architecture

First, an input sequence of tokens is mapped to a sequence of embeddings, which is then passed into the encoder. The encoder consists of a stack of “blocks”, each of which comprises two sub-components: a self-attention layer followed by a small feed-forward network. Layer normalization is applied to the input of each sub-component; we use a simplified version in which the activations are only rescaled and no additive bias is applied. After layer normalization, a residual skip connection adds each sub-component’s input to its output. Dropout is applied within the feed-forward network, on the skip connection, on the attention weights, and at the input and output of the entire stack.
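The rescaling-only normalization and the skip connection described above can be sketched roughly as follows. This is a minimal illustration, not the reference implementation: it assumes the rescaling divides by the root-mean-square of the activations and multiplies by a learned per-feature gain, and the names `rescale_norm`, `residual_block`, `gain`, and `eps` are hypothetical.

```python
import math


def rescale_norm(x, gain, eps=1e-6):
    """Rescale a vector by its root-mean-square (assumed form of the rescaling).

    There is no mean subtraction and no additive bias, only a gain.
    """
    mean_square = sum(v * v for v in x) / len(x)
    scale = 1.0 / math.sqrt(mean_square + eps)
    return [g * v * scale for g, v in zip(gain, x)]


def residual_block(x, sublayer, gain):
    """Normalize the input, apply the sub-layer, then add the skip connection."""
    normed = rescale_norm(x, gain)
    return [xi + yi for xi, yi in zip(x, sublayer(normed))]


# Usage: an identity "sub-layer" stands in for attention or the feed-forward net.
out = residual_block([3.0, 4.0], lambda h: h, gain=[1.0, 1.0])
```

Dropout is left out of the sketch to keep it deterministic.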

The decoder is similar in structure to the encoder except that it includes a standard attention mechanism after each self-attention layer that attends to the output of the encoder. The self-attention mechanism in the decoder also uses a form of autoregressive or causal self-attention, which only allows the model to attend to past outputs.
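To make the masking in the decoder's self-attention concrete, here is a small sketch of a lower-triangular mask and a softmax that ignores disallowed positions. The helper names `causal_mask` and `masked_softmax` are illustrative assumptions, not taken from any library.

```python
import math


def causal_mask(length):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(length)] for i in range(length)]


def masked_softmax(logits, allowed):
    """Softmax over one row of attention logits; masked positions get weight 0."""
    peak = max(l for l, ok in zip(logits, allowed) if ok)
    exps = [math.exp(l - peak) if ok else 0.0 for l, ok in zip(logits, allowed)]
    total = sum(exps)
    return [e / total for e in exps]


mask = causal_mask(3)
# The second position can see itself and the first position, but not the third.
weights = masked_softmax([0.0, 0.0, 0.0], mask[1])
```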

The output of the final decoder block is fed into a dense layer with a softmax output, whose weights are shared with the input embedding matrix. All attention mechanisms in the Transformer are split up into independent “heads” whose outputs are concatenated before being further processed.

Instead of using a fixed embedding for each position, relative position embeddings produce a different learned embedding according to the offset between the “key” and “query” being compared in the self-attention mechanism.

We use a simplified form of position embeddings where each “embedding” is simply a scalar that is added to the corresponding logit used for computing the attention weights. For efficiency, we also share the position embedding parameters across all layers in our model, though within a given layer each attention head uses a different learned position embedding.
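The idea of adding one learned scalar per offset to each attention logit can be sketched as below. This assumes a simple clipping of long offsets to a maximum distance, which may differ from how the actual model groups offsets; `relative_bucket`, `add_position_bias`, `bias_table`, and `max_distance` are hypothetical names. The table shown would belong to a single attention head and be reused by that head in every layer.

```python
def relative_bucket(query_pos, key_pos, max_distance):
    """Map the key-query offset to a table index, clipping long offsets."""
    offset = key_pos - query_pos
    clipped = max(-max_distance, min(max_distance, offset))
    return clipped + max_distance  # shift into 0 .. 2 * max_distance


def add_position_bias(logits, bias_table, max_distance):
    """Add one scalar per relative offset to a [query][key] grid of logits."""
    return [
        [logit + bias_table[relative_bucket(q, k, max_distance)]
         for k, logit in enumerate(row)]
        for q, row in enumerate(logits)
    ]


# One head's table for offsets -1, 0, +1 (values here are made up).
bias_table = [-1.0, 0.0, 2.0]
biased = add_position_bias([[0.0] * 3 for _ in range(3)], bias_table, max_distance=1)
```

Because the bias depends only on the offset, the same table works for any sequence length.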

Dataset (C4)

We used the following heuristics for cleaning up Common Crawl’s web-extracted text:

- only retained lines ending in a terminal punctuation mark

- discarded pages with fewer than 5 sentences and only retained lines that contained at least 3 words

- removed any page that contained any word on the “List of Dirty, Naughty, Obscene or Otherwise Bad Words”

- removed any line with the word Javascript

- removed any page where the phrase “lorem ipsum” appeared

- removed any pages that contained a curly bracket

- discarded all but one of any three-sentence span occurring more than once in the dataset

- used langdetect to filter out any pages not classified as English with a probability of at least 0.99
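Several of the heuristics above can be sketched as a single page-level function. This is a rough approximation under stated assumptions: the set of terminal punctuation marks, the naive sentence count, and the order in which checks run are guesses, `clean_page` and `bad_words` are hypothetical names, and the deduplication and language-detection steps are omitted.

```python
import re

# Assumed set of terminal punctuation marks.
TERMINAL_PUNCTUATION = (".", "!", "?", '"')


def clean_page(text, bad_words=frozenset(), min_words=3, min_sentences=5):
    """Return the cleaned page text, or None if the page should be dropped."""
    lowered = text.lower()
    # Page-level removals: placeholder text, curly brackets, listed words.
    if "lorem ipsum" in lowered or "{" in text or "}" in text:
        return None
    if any(word in bad_words for word in re.findall(r"[a-z']+", lowered)):
        return None

    kept = []
    for line in text.splitlines():
        line = line.strip()
        if not line.endswith(TERMINAL_PUNCTUATION):
            continue  # keep only lines ending in terminal punctuation
        if len(line.split()) < min_words:
            continue  # keep only lines with enough words
        if "javascript" in line.lower():
            continue  # drop lines mentioning Javascript
        kept.append(line)

    cleaned = "\n".join(kept)
    # Naive sentence count: runs of ., ! or ? (an approximation).
    if len(re.findall(r"[.!?]+", cleaned)) < min_sentences:
        return None
    return cleaned


page = (
    "Menu\n"
    "This is one. This is two. This is three.\n"
    "Enable Javascript to continue.\n"
    "Four is here! Is five here?\n"
)
result = clean_page(page)
```

In this example the navigation line and the Javascript warning are dropped, and the remaining two lines pass the sentence threshold.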

https://pypi.org/project/langdetect/


https://www.tensorflow.org/datasets/catalog/c4


Downstream Tasks

We measure performance on the GLUE and SuperGLUE text classification meta-benchmarks, which cover tasks such as:

- sentence acceptability judgement

- sentiment analysis

- paraphrasing/sentence similarity

- natural language inference

- coreference resolution

- sentence completion

- word sense disambiguation

- question answering

https://super.gluebenchmark.com/


Pre-Training

The model is trained with a maximum likelihood objective (using “teacher forcing”) regardless of the task. To specify which task the model should perform, we add a task-specific prefix to the original input sequence before feeding it to the model.

Note that the choice of text prefix used for a given task is essentially a hyperparameter. We found that changing the exact wording of the prefix had limited impact and so did not perform extensive experiments into different prefix choices. We allow for separately fine-tuning the model on each individual task and use short task prefixes instead of an explicit question-answer format.
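As a small illustration of the prefix mechanism, the sketch below turns a few tasks into (input, target) string pairs. The helper `to_text_to_text` is hypothetical, and the prefixes and sentences are only examples in the style of the overview figure; as noted above, the exact wording of a prefix is a tunable choice.

```python
def to_text_to_text(prefix, text, target):
    """Prepend a task prefix to the input; the target is always plain text."""
    return f"{prefix}: {text}", str(target)


examples = [
    # Translation: the target is the translated sentence.
    to_text_to_text("translate English to German", "That is good.", "Das ist gut."),
    # Classification: the target is the label written out as a word or phrase.
    to_text_to_text("cola sentence", "The course is jumping well.", "not acceptable"),
]

for model_input, model_target in examples:
    print(model_input, "->", model_target)
```

Because both sides are strings, the same maximum likelihood objective and decoding procedure apply to every task.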

We mainly consider models that explicitly process an input with an encoder before generating an output with a separate decoder and we focus on transfer learning rather than zero-shot learning. Our framework also allows for generative tasks like machine translation and abstractive summarization, where it is not possible to enumerate all possible output choices.

During pre-training, we use an “inverse square root” learning rate schedule: 1 / sqrt(max(n, k)), where n is the current training iteration and k is the number of warm-up steps (set to 10^4 in all of our experiments). This sets a constant learning rate of 0.01 for the first 10^4 steps, then decays the learning rate in proportion to 1 / sqrt(n) until pre-training is over.
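The schedule is short enough to write out directly. The sketch below assumes the warm-up count is 10^4 steps, which is what yields the constant rate of 0.01 at the start; the function name `inverse_sqrt_lr` is illustrative.

```python
import math


def inverse_sqrt_lr(step, warmup_steps=10_000):
    """Learning rate 1 / sqrt(max(n, k)) for iteration n and warm-up count k."""
    return 1.0 / math.sqrt(max(step, warmup_steps))


# Constant during warm-up, then shrinking with the inverse square root of the step.
rates = [inverse_sqrt_lr(n) for n in (1, 10_000, 40_000)]
```

Quadrupling the step count after warm-up halves the learning rate, since the rate scales as 1 / sqrt(n).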

Experiments

To provide a reasonable means of comparison, we consider multiple configurations for our encoder-decoder model. We will refer to the number of layers and parameters in a BERT_BASE-sized layer stack as L and P, respectively. We will use M to refer to the number of FLOPs required for an L + L-layer encoder-decoder model or L-layer decoder-only model to process a given input-target pair.

In total, we will compare:

- An encoder-decoder model with L layers in the encoder and L layers in the decoder. This model has 2P parameters and a computation cost of M FLOPs.

- An equivalent model, but with parameters shared across the encoder and decoder, resulting in P parameters and an M-FLOP computational cost.

- An encoder-decoder model with L/2 layers each in the encoder and decoder, giving P parameters and an M/2-FLOP cost.

- A decoder-only language model with L layers and P parameters and a resulting computational cost of M FLOPs.

- A decoder-only prefix LM with the same architecture (and thus the same number of parameters and computational cost) but with fully-visible self-attention over the input.
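The difference between the last two variants comes down to the attention mask. A minimal sketch, assuming the input (prefix) and target are concatenated into one sequence and that `prefix_lm_mask` is a hypothetical helper name: every position may attend to the whole prefix, while positions in the target may additionally attend only to earlier target positions.

```python
def prefix_lm_mask(prefix_len, total_len):
    """mask[i][j] is True when position i may attend to position j.

    Keys inside the prefix are always visible; other keys follow the causal rule.
    """
    return [
        [j < prefix_len or j <= i for j in range(total_len)]
        for i in range(total_len)
    ]


# Two input tokens followed by two target tokens.
mask = prefix_lm_mask(prefix_len=2, total_len=4)

# With an empty prefix the mask reduces to the plain causal language-model mask.
causal = prefix_lm_mask(prefix_len=0, total_len=3)
```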

Performance

Further Readings

https://sh-tsang.medium.com/review-t5-text-to-text-transfer-transformer-b3f0f3c07295


https://towardsdatascience.com/understanding-t5-model-text-to-text-transfer-transformer-model-69ce4c165023
